
Seokhyeon Hong

Hello, world! I am a Ph.D. student at the KAIST Visual Media Lab, advised by Prof. Junyong Noh. My research interests lie in computer graphics and character animation, including motion generation, editing, in-betweening, retargeting, rigging, and all the fun stuff that makes characters move.


Publications


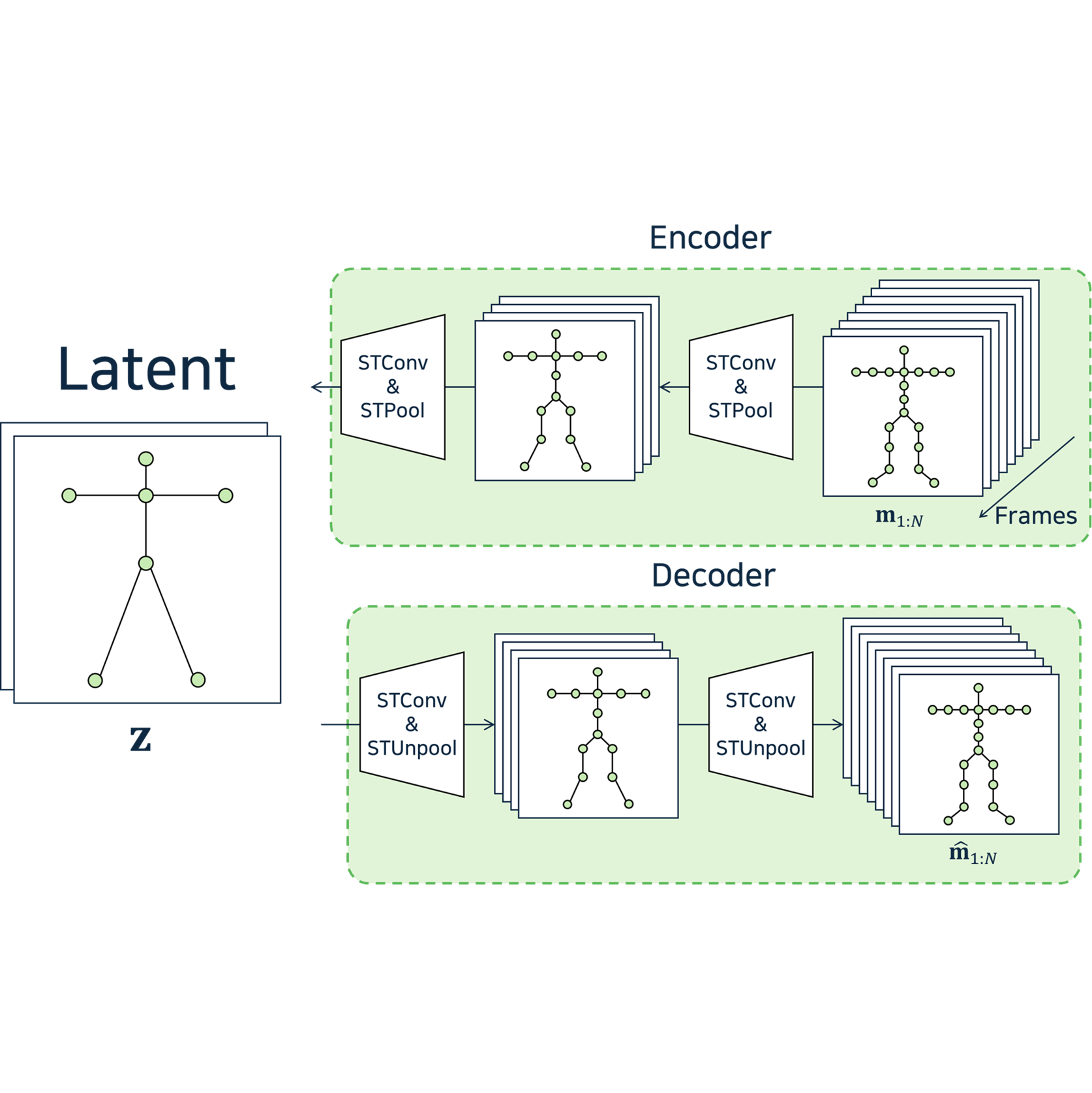

CVPR 2025 project page / paper / code / video

Skeleton-aware latent diffusion that models interactions among skeletal joints, motion frames, and textual words for text-driven motion generation and zero-shot editing.



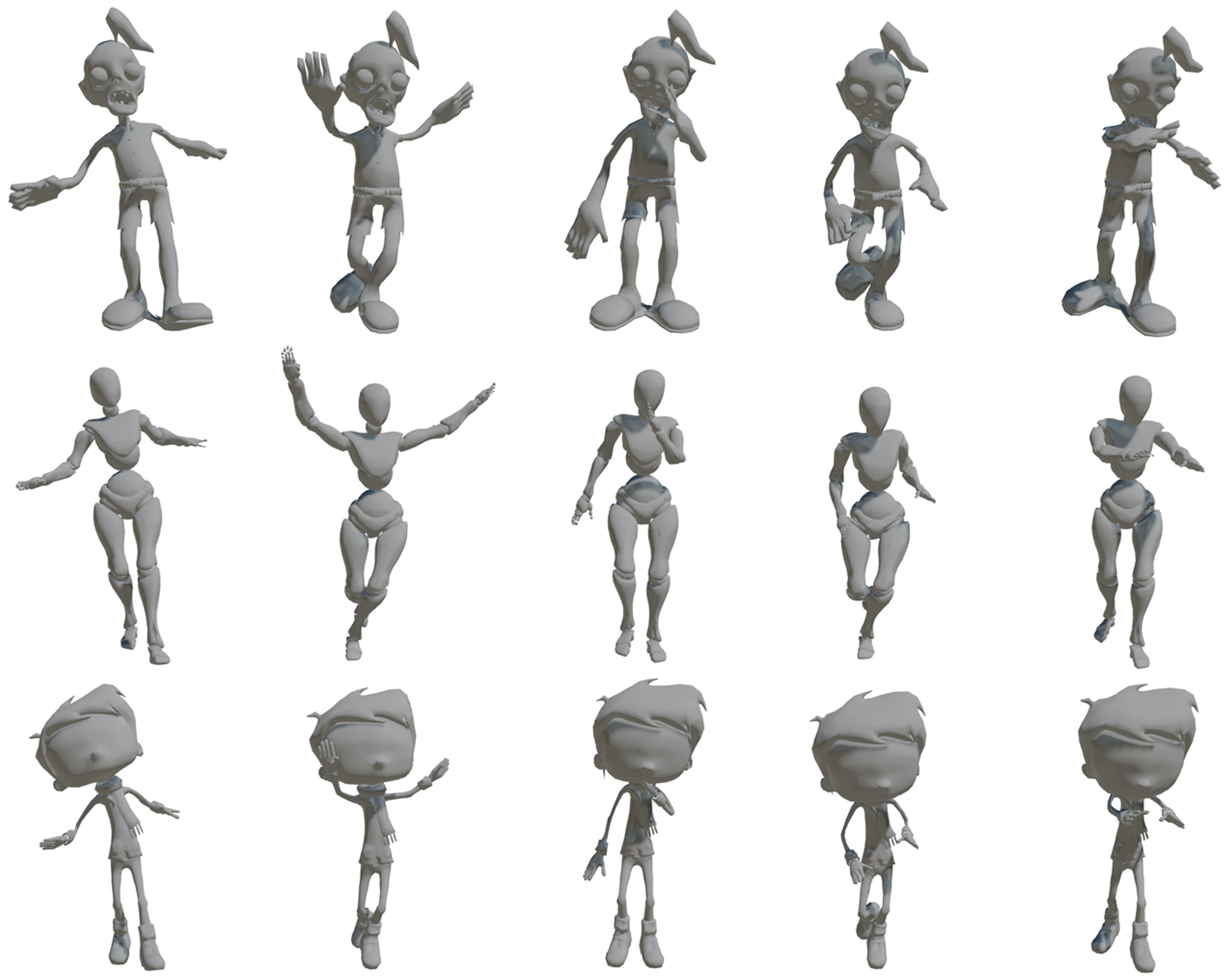

Kwan Yun, CVPR 2025 project page / paper / code

Motion in-betweening for arbitrary characters using video diffusion models fine-tuned with ICAdapt and motion-video mimicking, reducing dependency on character-specific datasets.


(* equal contribution) Eurographics 2025; CGF project page / paper / code / video

Rigging and skinning 3D character meshes by leveraging cross-attention modules and 2D generative priors, generalizing robustly across diverse skeletal and mesh configurations.


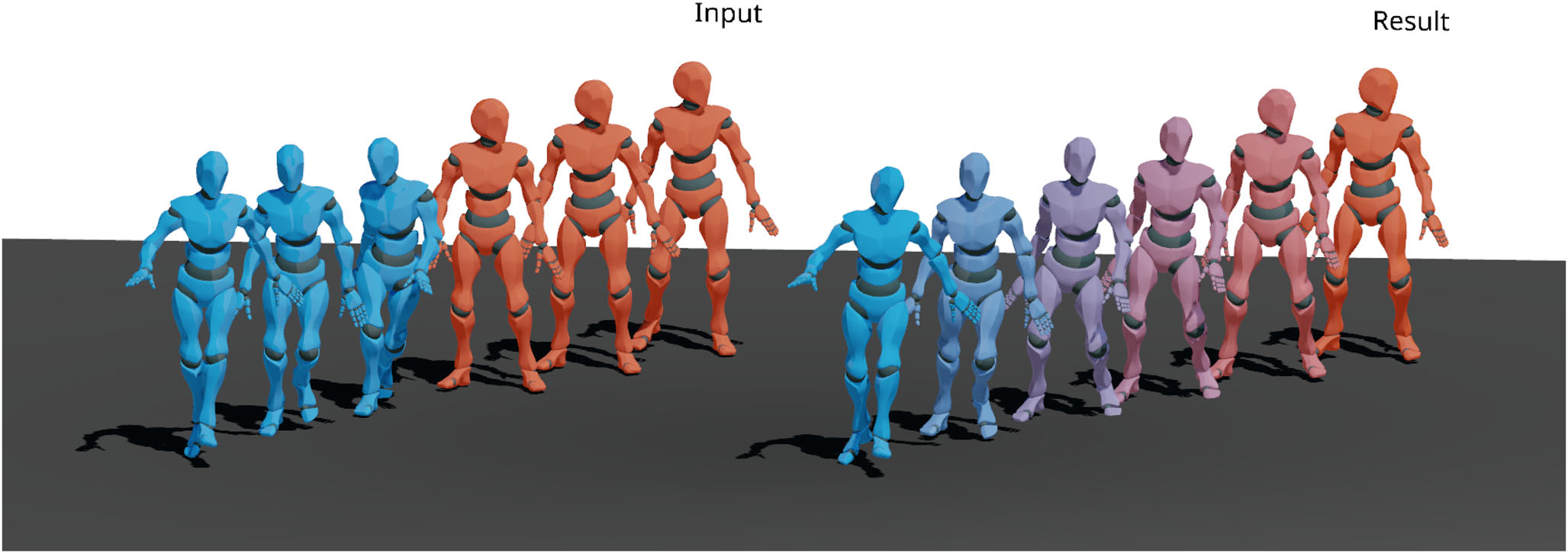

Inseo Jang, Soojin Choi, SIGGRAPH Asia 2024; TOG project page / paper / video

Retargeting interaction motions between two skinned characters using sparse, mesh-agnostic geometry representations and spatio-cooperative transformers.


SCA 2024; CGF paper / code / video

Long-term motion in-betweening via keyframe prediction with two-stage hierarchical transformers.


Haemin Kim, Kyungmin Cho, Eurographics 2024; CGF paper / video

Training a recurrent motion refiner for neural locomotion stitching.

Projects


aPyOpenGL

github

A Python library for motion data processing with nice-looking OpenGL visualization. Beyond motion-related functions (e.g., forward kinematics), it provides auxiliary utilities such as rotation conversion, file I/O, and skinned-mesh visualization. Most of my research projects have used this library for data processing and visualization.